This year we were, unfortunately, eliminated from the competition quite early. So we used our time to do a small survey with all teams that participated this year in the Humanoid League Kid-Size (11 teams). In our opinion, there is still a lack of knowledge about what other teams are doing. As a result, teams try to come up with solutions for problems that other teams have already solved. We hope that this small survey will help to mitigate this issue. Naturally, we only give a very abbreviated version here with almost all details missing, so please talk to the corresponding teams to learn more.

All teams in the league were happy to share their knowledge with us. We thank them for their openness, since otherwise this survey would not have been possible.

We also hope to continue this survey in the coming years, which would also allow tracking the development of the league over time. Maybe it would also be possible to require filling out such a survey during registration for the next RoboCup.

The following abbreviations will be used:

BH: Bold Hearts

CIT: CIT Brains

HBB: Hamburg Bit-Bots

ICH: Ichiro

ITA: ITAndroids

NU: NUbots

RHO: Rhoban Football Club

RB: ROBIT

RF: RoboFei

KMU: Team-KMUTT

UA: UTRA Robosoccer

Participation in the Humanoid Virtual Season (HLVS)

We asked whether teams will participate in the next HLVS. The majority (CIT, HBB, ITA, NU, UA) have already participated and will participate again. BH and KMU are considering participating. ICH and RB said that they are already taking part in too many other competitions. Similarly, RHO and RF said that they have no time.

For any teams that want to create a Webots model of their robot, we would like to point you to these tools, which might help you:

Creating a URDF from an Onshape CAD assembly: https://github.com/Rhoban/onshape-to-robot

Creating a Webots proto model from URDF: https://github.com/cyberbotics/urdf2webots

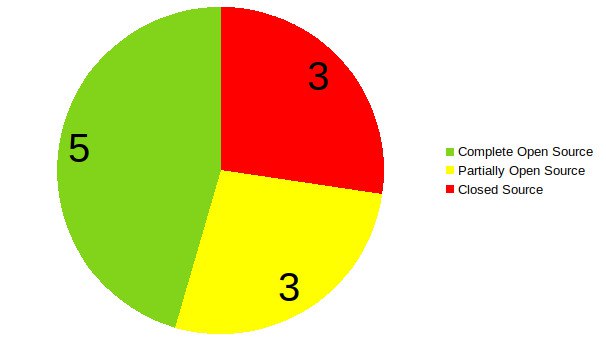

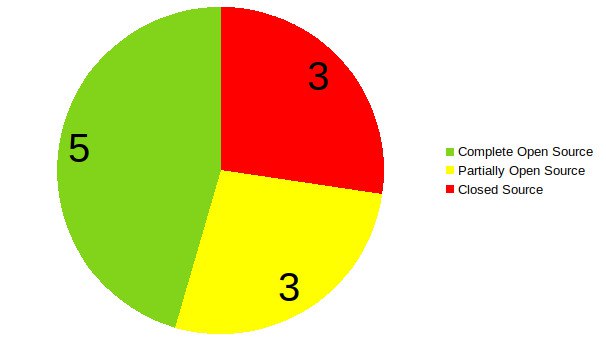

Open Source

The number of teams making their code and hardware (partially) open source is very high (see Figure). A list is given below. We also added direct links to specific parts in the following section if we knew them, but if there is no link provided, there might still be code available by the team, so have a look.

Software

Computer Vision

The rise of CNNs and deep learning has also affected the Humanoid League. Almost all teams use either some kind of YOLO or similar CNNs. The only exception is NU, which uses its own visual mesh approach.

HBB is also notable because they use not only bounding box output but also segmentations for the field area and the lines. These are later used as features for the localization.

Distance Estimation

Just detecting the different features in the camera image is not enough. We also need their position relative to the robot. One way to handle this is to circumvent it by working completely in image space (BH, RF). It is also possible to use the size of the ball in the image, as its real size is known. This is used by NU and UA.
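The ball-size idea follows directly from the pinhole camera model. The following is a minimal sketch, assuming an undistorted image and a focal length given in pixels. The function name and the example numbers are hypothetical and are not taken from the code of NU or UA.

```python
def distance_from_ball_size(focal_length_px: float,
                            ball_diameter_m: float,
                            ball_diameter_px: float) -> float:
    """Estimate the distance to the ball with the pinhole model.

    An object of real size D at distance Z appears with size d = f * D / Z
    in the image, so Z = f * D / d.
    """
    if ball_diameter_px <= 0:
        raise ValueError("ball diameter in pixels must be positive")
    return focal_length_px * ball_diameter_m / ball_diameter_px


# Hypothetical values: 600 px focal length, 0.14 m ball, 42 px wide detection.
distance_m = distance_from_ball_size(600.0, 0.14, 42.0)  # roughly 2 m
```

The estimate degrades for distant balls, because a detection that is off by a single pixel then changes the result noticeably.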

The most common approach is (not surprisingly) inverse perspective mapping (IPM). Here the height of the camera (given through forward kinematics) and the angle (forward kinematics + IMU) are used together with an intrinsic camera calibration to project a point in image space to Cartesian space. A well-tested and maintained package for ROS 2 can be found here: https://github.com/ros-sports/ipm
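The projection itself can be sketched in a few lines. This is a simplified illustration and not the API of the ros-sports/ipm package: it assumes an undistorted pinhole camera, a flat field, and a camera that is only pitched (no roll or yaw). All names are hypothetical.

```python
import math


def ipm_project(u, v, fx, fy, cx, cy, cam_height, pitch):
    """Project pixel (u, v) onto the ground plane z = 0.

    Returns (x, y) in metres relative to the point below the camera
    (x forward, y left), or None if the pixel ray does not hit the ground.
    `pitch` is the downward tilt of the camera in radians.
    """
    # Ray through the pixel in the optical frame (x right, y down, z forward).
    x_o = (u - cx) / fx
    y_o = (v - cy) / fy
    # Same ray in a level camera frame (x forward, y left, z up).
    fwd, left, up = 1.0, -x_o, -y_o
    # Rotate about the y axis so that a positive pitch looks downwards.
    c, s = math.cos(pitch), math.sin(pitch)
    dx = c * fwd + s * up
    dy = left
    dz = -s * fwd + c * up
    if dz >= 0.0:
        return None  # ray points at or above the horizon
    # Scale the ray so that it drops exactly cam_height.
    t = cam_height / -dz
    return (t * dx, t * dy)
```

With a camera 1 m above the ground that is tilted down by 45°, the image centre maps to a point 1 m in front of the robot.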

This year, no team used stereo vision to solve this problem.

| Team | Distance estimation |
| --- | --- |
| BH | Working in image space |
| CIT | IPM |
| HBB | IPM |
| ICH | Simplified IPM (no camera calibration, fixed height) |
| ITA | IPM |
| NU | IPM + ball size |
| RHO | IPM |
| RB | IPM |
| RF | Working in image space |
| KMU | IPM |
| UA | IPM + ball size |

Localization

Since localization is not necessarily needed to score a goal, it is neglected by many teams. There are four teams (BH, NU, RF, KMU) that don’t have a finished localization. ICH uses a simple approach based on the distance to the goals.

The most commonly used approach is the particle filter (CIT, HBB, ITA, RHO, UA) based on different features.
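For readers who have not used one, the core loop of a particle filter (predict, weight, resample) can be sketched as follows. This is a deliberately reduced illustration and not the implementation of any team: it localizes along a single axis using the measured distance to one landmark at a known position, whereas the teams estimate a full 2D pose from the features listed in the table. All names and noise values are assumptions.

```python
import math
import random


def particle_filter_step(particles, motion, measurement, landmark,
                         motion_noise, meas_noise, rng):
    """One predict / weight / resample cycle for a 1D position estimate."""
    # Predict: apply the odometry step plus random motion noise.
    moved = [p + motion + rng.gauss(0.0, motion_noise) for p in particles]
    # Weight: Gaussian likelihood of the measured landmark distance.
    weights = [
        math.exp(-0.5 * ((abs(landmark - p) - measurement) / meas_noise) ** 2)
        for p in moved
    ]
    if sum(weights) == 0.0:
        return moved  # no particle explains the measurement, skip resampling
    # Resample: draw particles in proportion to their weight.
    return rng.choices(moved, weights=weights, k=len(moved))


def estimate(particles):
    """Use the particle mean as the position estimate."""
    return sum(particles) / len(particles)
```

Starting from particles spread uniformly over the field, a few cycles with consistent measurements are usually enough for the mean to settle near the true position.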

| Team | Localization |
| --- | --- |
| BH | No localization |
| CIT | Particle filter (lines, intersections, goal posts) |
| HBB | Particle filter (line points) |
| ICH | Distance between goals |
| ITA | Particle filter (field boundary, goal posts) |
| NU | Working on particle filter (lines, goal posts) |
| RHO | Particle filter (line intersections, goal posts) |
| RB | Monte Carlo (intersections) |
| RF | No localization |
| KMU | No localization |
| UA | Particle filter (AMCL) |

Walking

One of the hardest parts for many teams is getting their robot to walk stably. Bipedal walking is still a very active research topic, and therefore it is not surprising that there are various approaches used in the league.

Many teams use some kind of pattern generator. Here quintic splines are mostly used. This is due to the fact that RHO wrote an open source walk controller called IKWalk that is now also used by BH. It was further improved by HBB, who added automatic parameter optimization. This version is also used by NU.
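A quintic spline segment is a fifth-order polynomial whose position, velocity and acceleration are fixed at both ends, which gives smooth transitions between the knots of a step pattern. The sketch below is a generic illustration of one such segment, not code from IKWalk or from the HBB walk. The function names are hypothetical.

```python
def quintic_coefficients(p0, v0, a0, p1, v1, a1, duration):
    """Coefficients c0..c5 of p(t) = sum(c_i * t**i) on [0, duration].

    The polynomial matches position, velocity and acceleration
    (p0, v0, a0) at t = 0 and (p1, v1, a1) at t = duration.
    """
    T = duration
    c0 = p0
    c1 = v0
    c2 = a0 / 2.0
    c3 = (20.0 * (p1 - p0) - (8.0 * v1 + 12.0 * v0) * T
          - (3.0 * a0 - a1) * T ** 2) / (2.0 * T ** 3)
    c4 = (30.0 * (p0 - p1) + (14.0 * v1 + 16.0 * v0) * T
          + (3.0 * a0 - 2.0 * a1) * T ** 2) / (2.0 * T ** 4)
    c5 = (12.0 * (p1 - p0) - 6.0 * (v1 + v0) * T
          - (a0 - a1) * T ** 2) / (2.0 * T ** 5)
    return (c0, c1, c2, c3, c4, c5)


def evaluate(coefficients, t):
    """Evaluate the polynomial at time t using Horner's scheme."""
    result = 0.0
    for c in reversed(coefficients):
        result = result * t + c
    return result
```

For a rest-to-rest motion from 0 to 1 within one second, this yields the familiar profile 10t³ − 15t⁴ + 6t⁵.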

Another approach is to use the Zero Moment Point (ZMP) (CIT, ITA).

One interesting idea that we got from ITA is the following: when using a PD controller based on IMU data to stabilize the robot, it makes sense to use the direct values from the gyro (angular velocity) for the D term instead of a computed derivative (which is noisier).
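A minimal sketch of this idea, assuming a constant target angle so that the derivative of the error equals the negative angular velocity. The function and parameter names are hypothetical and this is not ITA's code.

```python
def pd_correction(target_angle, measured_angle, gyro_rate, kp, kd):
    """PD correction that takes the D term directly from the gyro.

    For a constant target, d(error)/dt = -d(measured_angle)/dt, so the
    measured angular velocity enters with a negative sign and no numerical
    differentiation of the (noisy) angle estimate is needed.
    """
    error = target_angle - measured_angle
    return kp * error - kd * gyro_rate


# Hypothetical values: the torso leans 0.1 rad forward and is still
# falling forward with 0.5 rad/s.
correction = pd_correction(0.0, 0.1, 0.5, kp=2.0, kd=0.1)
```

Both terms push in the same direction here: the P term counteracts the lean and the D term counteracts the ongoing rotation.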

| Team | Walking approach |
| --- | --- |
| BH | Rhoban IKWalk |
| CIT | ZMP based pattern generator |
| HBB | Quintic splines, IMU PD control, phase modulation, parameter optimization https://2022.robocup.org/download/Paper/paper_19.pdf |
| ICH | Darwin-OP walk controller with own additions |
| ITA | ZMP, gravity compensation, PD control |
| NU | Hamburg Bit-Bots walk |
| RHO | Cubic splines, foot pressure stabilization |
| RB | Pattern generator, IMU+foot pressure stabilization |
| RF | Sinusoid |
| KMU | Pattern generator |
| UA | Hardcoded trajectories |

Getting Up

All teams except HBB use manual keyframe animations for their standing up motion. Many of them told us that they are annoyed by this as it requires a lot of retuning.

HBB is the only team that follows a completely different approach, which is very similar to their walking approach. It uses Cartesian quintic splines to generate the motion and a PID controller to stabilize it. The parameters are optimized automatically and the approach can be transferred to other robots. If you are interested, you can have a look here:

https://www.researchgate.net/publication/356870627_Fast_and_Reliable_Stand-Up_Motions_for_Humanoid_Robots_Using_Spline_Interpolation_and_Parameter_Optimization

Kick

As with the stand-up motion, almost all teams solve the kick using a keyframe animation.

There are two exceptions. First, HBB again uses an approach that is similar to their walking and getting up. It also allows defining the kick direction. We don’t have a paper about it (yet), but you can see a general overview here: https://robocup.informatik.uni-hamburg.de/wp-content/uploads/2021/10/Praktikumsbericht.pdf

Second, ITA also uses splines for the kick movement but stabilizes it using the ZMP.

Decision Making

Many teams still rely on simple approaches such as state machines (BH, ICH, KMU, UA) or simple if-else combinations, also called decision trees (RB, RF).

Others use more sophisticated approaches such as a Hierarchical Task Network planner (CIT) or behavior trees (ITA).

Some teams also developed their own approaches. HBB developed a completely new approach that is somewhere between a behavior tree and a state machine. NU built a custom framework that is based on subsumption.

One team (RHO) also used direct reinforcement learning.

Decision making is also one of the software categories with the largest variety of approaches.

Hardware

Material

| Team | Material |
| --- | --- |
| BH | 3d printed, aluminum |
| CIT | Fiber glass, aluminum, TPU, PLA |
| HBB | Carbon fiber, stainless steel, aluminum, TPU, PLA |
| ICH | Aluminum, carbon fiber, stainless steel, PLA |
| ITA | Aluminum |
| NU | Aluminum (legs), 3d printed (rest) |
| RHO | Aluminum |
| RB | Aluminum |
| RF | Carbon fiber, aluminum, 3d printed |
| KMU | Aluminum |
| UA | 3d printed |

Controller Board

Many teams are still relying on old hardware from Robotis, such as the CM730. We also heard multiple complaints about these boards, which aligns with our own experience with them.

Some teams also made their own solutions using some sort of microcontroller.

We have investigated this topic before (https://robocup.informatik.uni-hamburg.de/wp-content/uploads/2019/06/dxl_paper-4.pdf) and found a better solution (called QUADDXL) that allows much higher control rates and is less error prone. It is now also used in the new robot of CIT.

Camera

Most teams are using a Logitech webcam (C920 and similar ones). Some teams use industrial cameras. These have the advantage that they mostly have a global shutter and are more sensitive, allowing for a shorter exposure time and thus reducing the blurriness of the images.

USB and Ethernet are the two connection options in use. We would like to point out a known issue of USB3 interfering with Wi-Fi: https://ieeexplore.ieee.org/document/6670488

If you are interested in how to integrate Power over Ethernet in your robot, so that you only need a single cable for your Ethernet camera, you can have a look at our CORE board: https://github.com/bit-bots/wolfgang_core

| Team | Camera |
| --- | --- |
| BH | C920 |
| CIT | e-CAM50_CUNX |
| HBB | Basler ACE-ACA2040-35gc Ethernet + Kowa lens LM5NCL 1/1.8” 4mm C-Mount |
| ICH | C930 |
| ITA | C920 |
| NU | Flir Blackfly, fisheye, USB3 |
| RHO | PointGrey Blackfly GigE camera |
| RB | C920 |
| RF | Lenovo, C920 soon |
| KMU | C920 |
| UA | C920 |

Leg Backlash

One of our current hardware issues is the extreme backlash in the legs, meaning that you can move the foot a lot while the motors are stiff. Therefore, we specifically asked the teams about this too.

For most teams this is also one of their largest hardware issues. The problem comes from the backlash of the servos (e.g. 0.25° for a Dynamixel XH540 or 0.33° for a Dynamixel MX-106), from deformations in the leg parts and from the leg-torso connection.
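To get a feeling for what these servo values mean at the foot, the angular play can be converted into a displacement at the end of a lever. The sketch below is a back-of-the-envelope calculation and the lever length of 0.5 m is a hypothetical value, not a measurement of any robot in the league. It only covers the play of a single servo, so deformations and the leg-torso connection would come on top.

```python
import math


def foot_play_mm(backlash_deg: float, lever_length_m: float) -> float:
    """Displacement at the end of a rigid lever caused by angular play."""
    return 1000.0 * lever_length_m * math.tan(math.radians(backlash_deg))


# Hypothetical 0.5 m distance between the hip joint and the foot.
play_mx106 = foot_play_mm(0.33, 0.5)  # a bit under 3 mm
play_xh540 = foot_play_mm(0.25, 0.5)  # a bit over 2 mm
```

Since a leg has several joints in series, the individual contributions can add up in the worst case.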

One solution is to use parallel kinematics like CIT, but this results in other issues (e.g. more complicated kinematics, not being able to use URDF, …).

Some teams tried to keep serial kinematics and reduce the different factors that introduce the backlash. NU used new legs made of thick aluminum together with needle roller bearings (https://au.rs-online.com/web/p/roller-bearings/2346912 and https://www.robotis.us/hn05-t101-set/?_ga=2.226272029.682397097.1657712939-1062027158.1657712938). Similarly HBB, RHO and RB use bearings in the connection between legs and torso.

Infrastructure

Programming Language

| Team | Languages |
| --- | --- |
| BH | C++, Python |
| CIT | C++, Python |
| HBB | C++, Python |
| ICH | C++, Python |
| ITA | C++; Python+Matlab for tools |
| NU | C++ |
| RHO | C++, some Python for RL |
| RB | C++ |
| RF | C++, Python |
| KMU | C++, Python |
| UA | Python |

Configuration Management

An often overlooked point in software is that you need not only your own code but also an operating system including a set of installed dependencies, network configurations, USB device configurations, etc. It can easily happen that the systems of the different robots diverge from each other. Also, if a system needs to be set up from scratch, it may require some work.

Some teams do the complete process manually, while others clone their disk images or have some small scripts that automate the process (partially).

We found that using a system like Ansible to do this is very helpful. It makes the process reproducible, reduces human errors and documents the process (reducing the truck factor).

| Team | Configuration management |
| --- | --- |
| BH | Ansible |
| CIT | Partially automatic |
| HBB | Ansible |
| ICH | Script |
| ITA | Manual |
| NU | Script |
| RHO | Cloning image |
| RB | Manual |
| RF | Manual |
| KMU | Manual |
| UA | Manual |

Getting the code on the robot

At some point we need to get our software on the robot. While this seems to be a trivial thing, we were still curious how other teams are doing it.

One often used approach (CIT, ICH, ITA, RF) is to just use git on the robot to pull the newest software version. While this seems straightforward, it poses some issues in our opinion. First, all software needs to be committed to the repository before it can be tested on the robot and, second, your robot always needs access to the git server (so internet access).

Some teams (NU, RHO) just copy the compiled binaries onto their robot. We did something similar in the past, but since our robots have faster CPUs than our laptops, we switched to copying the code to the robot and building it there. This is also done by BH, RB, and KMU.

While many teams just use a normal scp (ssh copy), HBB, RHO and NU use rsync with the option to only copy modified files, which optimizes the time needed for getting the code onto the robot. We also use a small script to facilitate the process. You can find it here https://github.com/bit-bots/bitbots_meta/blob/master/scripts/robot_compile.py
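The rsync-based copy can be wrapped in a few lines. The following is a minimal sketch and not the linked robot_compile.py script: the user name, host name and paths are hypothetical, and only standard rsync options are used. rsync skips files whose size and modification time are unchanged, which is what makes repeated syncs fast.

```python
import subprocess


def build_rsync_command(src_dir, user, host, dest_dir, excludes=()):
    """Build an rsync call that mirrors src_dir to dest_dir on the robot."""
    cmd = [
        "rsync",
        "--archive",   # recurse and preserve timestamps and permissions
        "--compress",  # compress data during the transfer
        "--delete",    # remove files on the robot that were deleted locally
    ]
    for pattern in excludes:
        cmd.append(f"--exclude={pattern}")
    # A trailing slash copies the directory contents, not the directory itself.
    cmd.append(src_dir.rstrip("/") + "/")
    cmd.append(f"{user}@{host}:{dest_dir}")
    return cmd


def sync_to_robot(src_dir, user, host, dest_dir, excludes=()):
    """Run the sync and raise CalledProcessError if rsync fails."""
    subprocess.run(
        build_rsync_command(src_dir, user, host, dest_dir, excludes),
        check=True,
    )
```

A call such as `sync_to_robot("src", "robot", "nuc1", "~/ws/src", excludes=[".git"])` would then be followed by an ssh command that triggers the build on the robot.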

| Team | Deployment |
| --- | --- |
| BH | scp |
| CIT | git |
| HBB | rsync + build on robot via custom script |
| ICH | git |
| ITA | git |
| NU | compile on laptop + copy via rsync |
| RHO | compile on laptop + rsync |
| RB | Copy + build on robot |
| RF | git |
| KMU | scp + compile on robot |
| UA | docker |

Testing and Continuous Integration

Many teams (CIT, ITA, RHO, RB, RF, KMU) don’t use any kind of automated testing system or CI. The most commonly used platform is GitHub Actions (ICH, NU, UA).

| Team | Testing and CI |
| --- | --- |
| BH | CI for unit tests |
| CIT | No testing or CI |
| HBB | Had some tests and CI for previous ROS 1 version but not transferred to new ROS 2 version yet. Jenkins was used as platform |
| ICH | Github Actions |
| ITA | No testing or CI |
| NU | Building and tests with Github Actions |
| RHO | No CI but manual testing script for robot hardware |
| RB | No testing or CI |
| RF | No testing or CI |
| KMU | No testing or CI |
| UA | Github Actions |

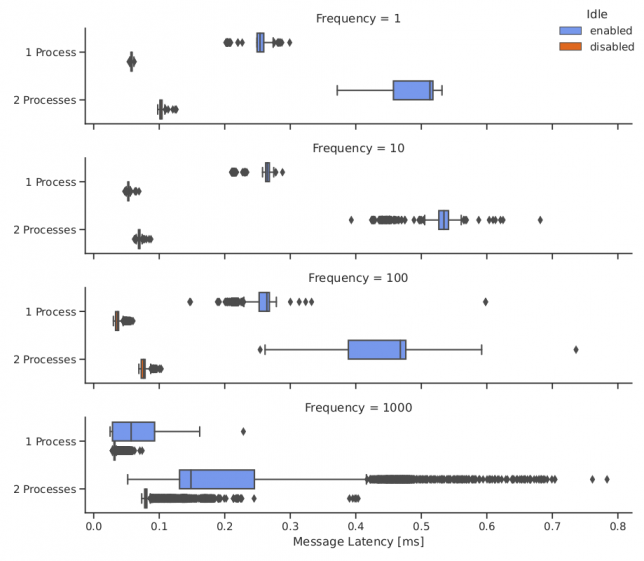

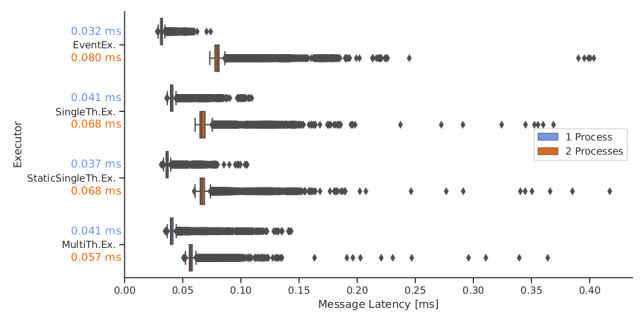

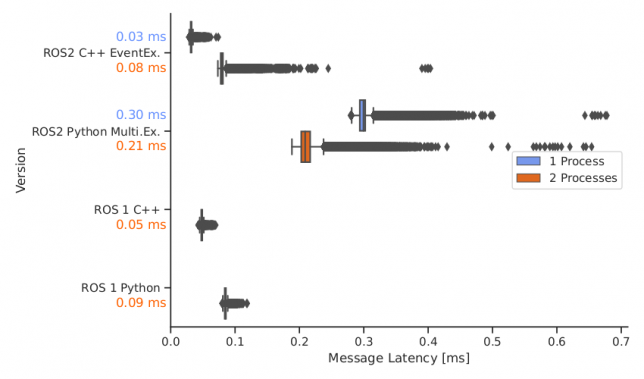

Usage of ROS

ROS usage seems to be rising continuously in the Humanoid League. This follows observations that were already made in other leagues (e.g. @Home, rescue), where the large majority of teams now use it. Additionally, there is the question of whether teams are still using ROS 1, which is now in its last version, or have already switched to ROS 2.

We just switched from ROS 1 to ROS 2 and have identified an array of issues, which are probably coming from the fact that it has not been used a lot for humanoid robots until now. But we will create another article about that.

It is interesting to see that some teams only use ROS for logging or debugging. This is probably a good approach to profit from some of ROS's strongest advantages without needing to rewrite a lot of their own code base.

| Team | ROS usage |
| --- | --- |
| BH | ROS 2 Foxy |
| CIT | Only for logging |
| HBB | ROS 2 Rolling |
| ICH | No but plan on using it |
| ITA | ROS 1 but are switching to ROS 2 |
| NU | No, have their own system NUClear |
| RHO | No |
| RB | ROS 1 |
| RF | ROS 2 Foxy |
| KMU | ROS 2 |
| UA | ROS 1 |

Operating System

Almost all teams use some version of Ubuntu on their robot. The only exception is NU, which uses a custom Arch Linux.

No team uses a real time kernel. But we heard from multiple teams that they were having issues with their motions (especially the walking) not running smoothly when the whole software stack was started. In our experience this is an issue with the default Linux scheduler, which will move your motion processes around between cores and interrupt them to run other processes. Some teams try to avoid this by setting the nice level of the important processes. For us this was not enough to solve the problem. We assign our processes to different CPU cores via “taskset” and make sure that the scheduler does not use these cores for other processes by setting “isolcpus”. This approach worked well for us.
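The pinning can also be done from inside a process. The sketch below uses Python's `os.sched_setaffinity`, which is only available on Linux and does the same job as calling taskset from the shell. It is an illustration and not our launch setup. The function name is hypothetical, and the isolcpus values in the comment are example values that depend on the CPU.

```python
import os


def pin_to_cores(cores, pid=0):
    """Restrict a process (pid 0 = the calling process) to the given cores.

    Returns the set of cores the process may run on afterwards.
    """
    if not hasattr(os, "sched_setaffinity"):
        raise OSError("CPU affinity API is not available on this platform")
    os.sched_setaffinity(pid, set(cores))
    return os.sched_getaffinity(pid)


# Pinning alone does not keep other processes off these cores. For that the
# cores are additionally isolated with the kernel boot parameter, for
# example isolcpus=2,3 (example values), and the motion process is then
# pinned to one of them with pin_to_cores({2}).
```

Without the isolation step, the scheduler can still place unrelated processes on the same core and interrupt the pinned process.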

We will explore the usage of real time kernels in the future.

Organization

We also asked how teams organize themselves. Some teams (CIT, HBB, ITA, NU, RB, RF, UA) hold weekly meetings for organization and/or working. Almost all teams are using Git (with GitHub being the most commonly used provider). The only exception is RB, which uses Slack to exchange code among team members.