Last year we had the opportunity to participate in the Running Robot Competition in China. We enjoyed it so much that we wanted to take part again this year.

The Running Robot Competition is not part of the RoboCup tournaments we usually participate in. It is a tournament where robots have to go through a course, overcoming multiple obstacles to reach their goal. For example, a robot may have to wait until a barrier has moved out of the way before walking past it, or it may have to climb a few stairs.

Due to Covid-19, we could not participate in person, but we were glad to take part over the internet. For this purpose, the organizers prepared a simulation environment.

We discussed a few options for how to solve the course.

One option was a more conventional approach. Here, we would start by developing a vision system that can, for example, detect the yellow and black of the barrier. As soon as the barrier reaches a certain angle, the robot would be programmed to start walking.
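We did not end up building this, so the following is only a rough sketch of what such a hand-written rule could look like, not code from our robot. The color thresholds, the 60 degree opening angle, and all function names are assumptions made for illustration. It looks for yellow pixels in an RGB camera image, estimates the angle of the barrier from the main axis of those pixels, and only allows walking once the barrier is steep enough.

```python
import numpy as np


def yellow_mask(rgb):
    """Boolean mask of pixels that look yellow (assumed thresholds)."""
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    return (r > 150) & (g > 150) & (b < 100)


def barrier_angle_deg(mask, min_pixels=50):
    """Angle of the barrier from horizontal in degrees (0 to 90).

    Returns None if too few yellow pixels are visible.
    """
    ys, xs = np.nonzero(mask)
    if xs.size < min_pixels:
        return None
    # The eigenvector with the largest eigenvalue of the pixel
    # covariance points along the long axis of the barrier.
    cov = np.cov(np.stack([xs, ys]).astype(float))
    eigvals, eigvecs = np.linalg.eigh(cov)
    dx, dy = eigvecs[:, np.argmax(eigvals)]
    return float(np.degrees(np.arctan2(abs(dy), abs(dx))))


def should_walk(rgb, open_angle_deg=60.0):
    """Hand-written rule: walk once the barrier is raised far enough."""
    angle = barrier_angle_deg(yellow_mask(rgb))
    # Assumption: if no barrier is visible, the path is treated as clear.
    return angle is None or angle >= open_angle_deg
```

A rule like this is easy to reason about, but every obstacle on the course would need its own detector and its own thresholds.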

However, we wanted to use this opportunity to learn about something that most of our team had less experience with. That is why we decided to use reinforcement learning for our approach.

Reinforcement learning is an approach where we use neural networks so the robot can learn how to overcome the obstacles without us telling it how to solve them. This can lead to more efficient ways of solving the problem than we would have thought of. This could include finding a bug in the physics of the simulator to achieve normally impossible things or something as simple as walking another way that we did not think of.

We used only the robot’s camera image as input to the reinforcement learning algorithm. Based on this information, the robot had to figure out what to do next. Does it have to wait for the barrier to rise, or is the barrier already up so that it can start walking?

This sounds trivial to us humans, but the robot starts out with no concept of a barrier being an obstacle. In the beginning, it does not know the difference between the ground, which it can step on, and an obstacle, which it should avoid.

To make it easier for the robot to learn these things, we rewarded it with points when it came closer to the goal and deducted points when it walked into an obstacle such as the barrier.
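To give an idea of what such a reward can look like, here is a minimal sketch. It is not our actual training code: the function name, the use of the distance to the goal as a progress measure, and the two weights are assumptions chosen for illustration.

```python
def step_reward(prev_distance, new_distance, collided,
                progress_scale=1.0, collision_penalty=5.0):
    """Reward for a single step of the robot.

    prev_distance / new_distance: distance to the goal before and after
    the step (assumed to be available from the simulator).
    collided: True if the robot walked into an obstacle during the step.
    """
    # Positive when the robot got closer to the goal, negative otherwise.
    reward = progress_scale * (prev_distance - new_distance)
    # Deduct points for touching an obstacle such as the barrier.
    if collided:
        reward -= collision_penalty
    return reward


# Moving 0.5 closer to the goal without touching anything is rewarded,
# the same step with a collision is punished overall.
print(step_reward(2.0, 1.5, collided=False))
print(step_reward(2.0, 1.5, collided=True))
```

With weights like these, waiting in front of a closed barrier costs nothing, while walking into it is clearly worse than standing still.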

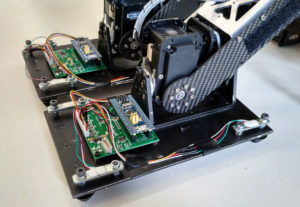

The robot could control the direction in which it walked. To let the robot walk, we used the same program that we use on our soccer-playing robots. Since a different robot was used in this competition, we had to change a few parameters. Fortunately, we were able to use a script from our existing walking software to let the robot learn the best way to walk in this new simulation.

With this approach, we were able to solve the first obstacles. Due to a misunderstanding, we had less time than we had expected and were not able to solve the full course.

However, we still had a lot of fun developing our approach to the problems the Running Robot Competition presented. We also had the opportunity to learn a lot about reinforcement learning.

As you have probably figured out from the headline already, we did not only have a lot of fun during the competition, we even managed to finish in third place!